Vector graphics are widely used in digital art and favored by designers for their scalability and layered structure. Creating and editing them, however, requires creativity and design expertise and is time-consuming. Recent text-to-vector (T2V) generation methods aim to make this process more accessible, but they directly optimize the control points of vector paths; without geometric constraints, this often yields intersecting or jagged paths.
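To make the failure mode concrete, the sketch below samples a cubic Bézier segment and counts how often the resulting polyline crosses itself. It is only an illustration of what an "intersecting path" means when control points are free to move; it is not part of the proposed method, and the function names (`cubic_bezier`, `sample`, `count_self_intersections`) are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def cubic_bezier(ctrl: Sequence[Point], t: float) -> Point:
    """Evaluate a cubic Bezier curve (four control points) at t in [0, 1]."""
    p0, p1, p2, p3 = ctrl
    u = 1.0 - t
    # Bernstein weights for a cubic curve.
    w = (u * u * u, 3 * u * u * t, 3 * u * t * t, t * t * t)
    x = w[0] * p0[0] + w[1] * p1[0] + w[2] * p2[0] + w[3] * p3[0]
    y = w[0] * p0[1] + w[1] * p1[1] + w[2] * p2[1] + w[3] * p3[1]
    return (x, y)


def sample(ctrl: Sequence[Point], n: int = 32) -> List[Point]:
    """Approximate the curve by n straight segments (n + 1 points)."""
    return [cubic_bezier(ctrl, i / n) for i in range(n + 1)]


def _orient(a: Point, b: Point, c: Point) -> float:
    """Signed area test: > 0 if c lies to the left of the line a -> b."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def _cross(a: Point, b: Point, c: Point, d: Point) -> bool:
    """True if segments ab and cd cross at a single interior point."""
    return (_orient(a, b, c) * _orient(a, b, d) < 0
            and _orient(c, d, a) * _orient(c, d, b) < 0)


def count_self_intersections(poly: Sequence[Point]) -> int:
    """Count crossings between non-adjacent segments of a polyline."""
    n = len(poly) - 1  # number of segments
    hits = 0
    for i in range(n):
        for j in range(i + 2, n):  # skip the neighbour sharing a vertex
            if _cross(poly[i], poly[i + 1], poly[j], poly[j + 1]):
                hits += 1
    return hits


# A well-behaved arch, and a segment whose inner control points have
# drifted past each other so that the curve forms a loop.
arch = [(0.0, 0.0), (0.0, 1.0), (1.0, 1.0), (1.0, 0.0)]
loop = [(0.0, 0.0), (3.0, 3.0), (-2.0, 3.0), (1.0, 0.0)]
```

Calling `count_self_intersections(sample(arch))` and `count_self_intersections(sample(loop))` distinguishes the two cases. A check like this only detects the problem after the fact, whereas constraining paths to a learned latent space is meant to avoid producing such shapes in the first place.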

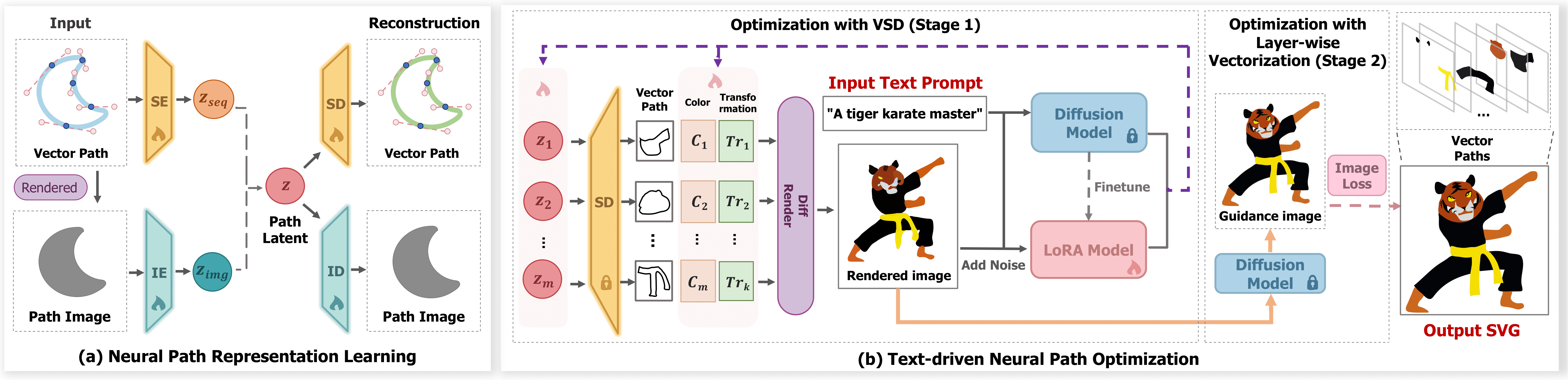

To overcome these limitations, we propose a novel neural path representation by designing a dual-branch Variational Autoencoder (VAE) that learns the path latent space from both sequence and image modalities. By optimizing the combination of neural paths, we can incorporate geometric constraints while preserving expressivity in generated SVGs. Furthermore, we introduce a two-stage path optimization method to improve the visual and topological quality of generated SVGs. In the first stage, a pre-trained text-to-image diffusion model guides the initial generation of complex vector graphics through the Variational Score Distillation (VSD) process. In the second stage, we refine the graphics using a layer-wise image vectorization strategy to achieve clearer elements and structure.

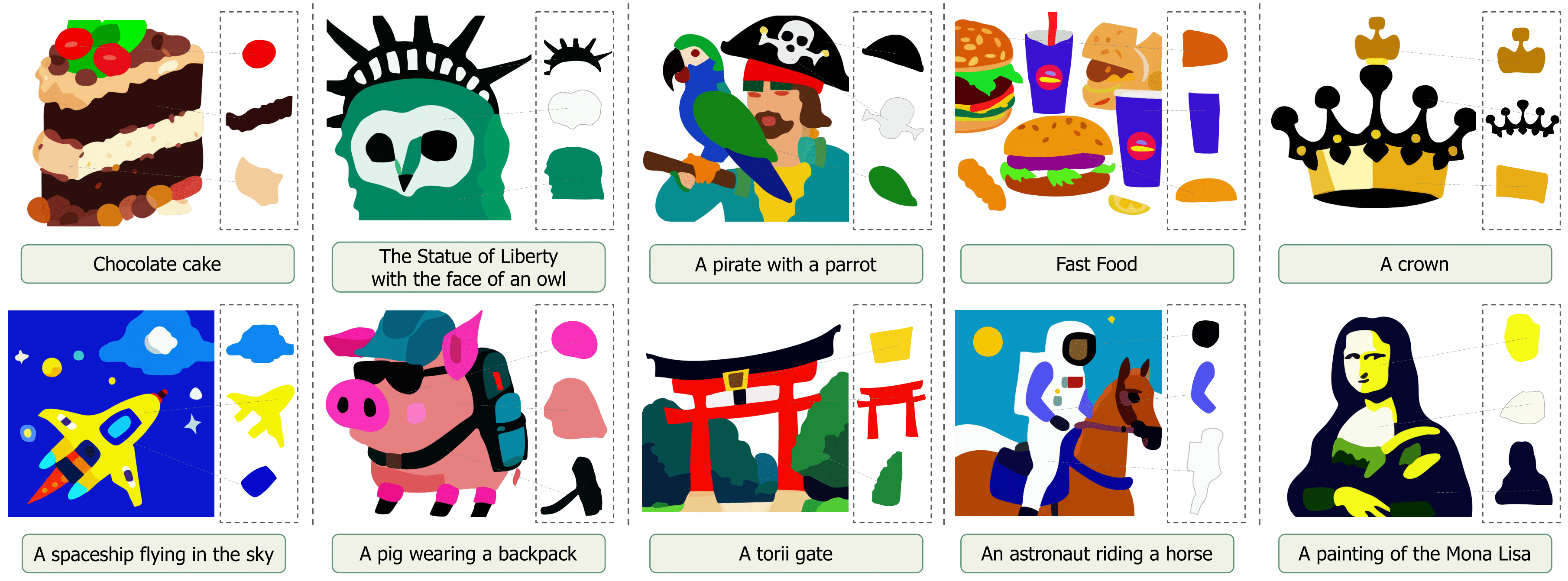

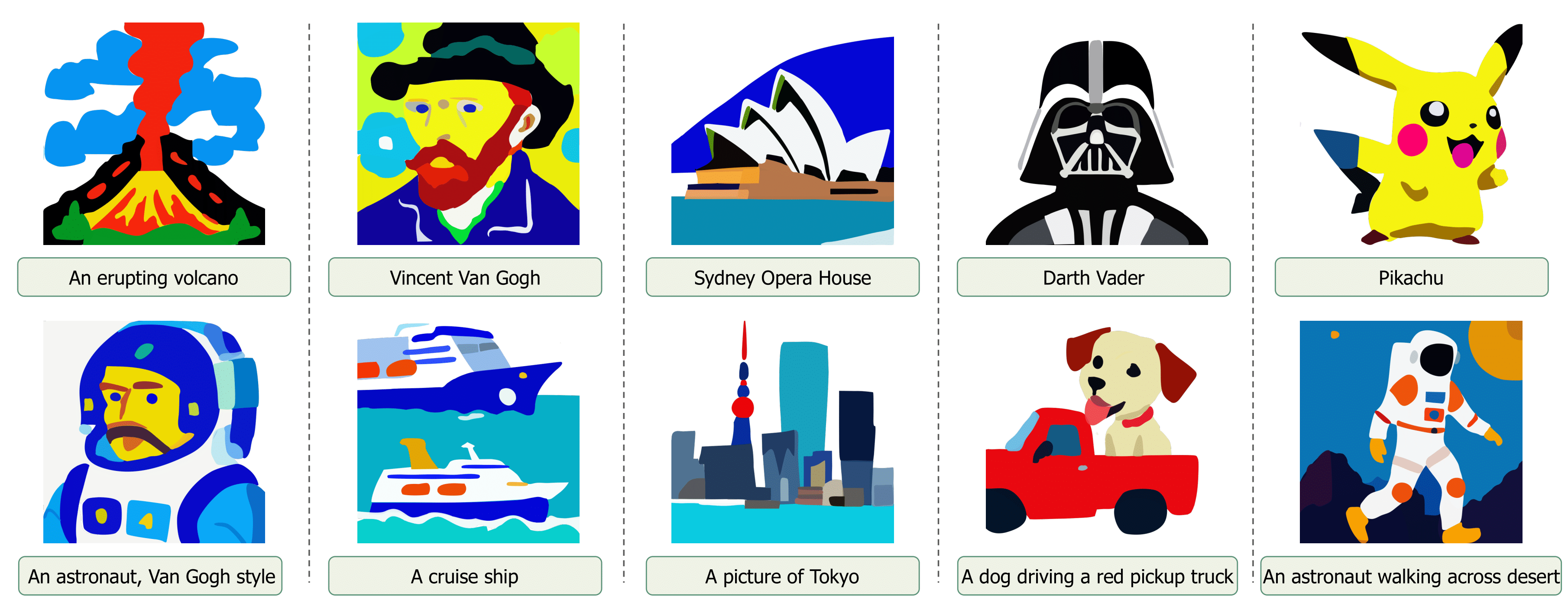

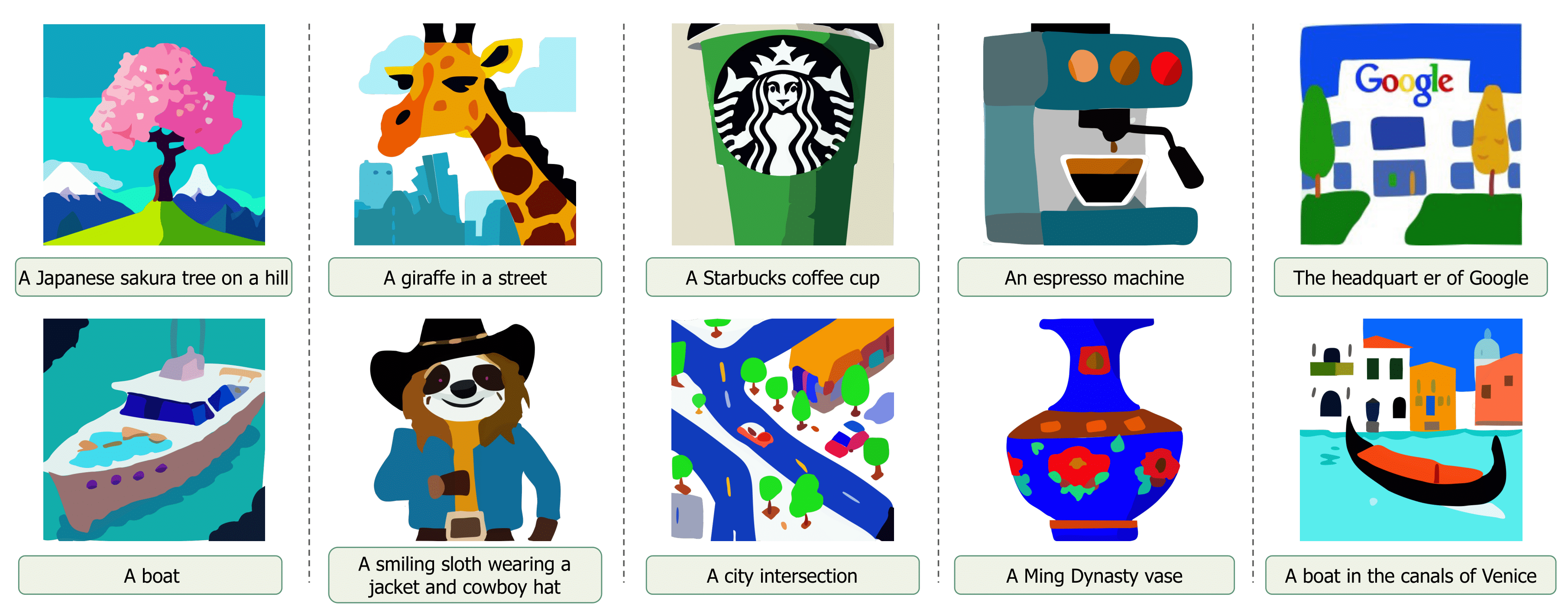

We demonstrate the effectiveness of our method through extensive experiments and showcase various applications.

Our pipeline starts with learning a neural representation of paths by training a dual-branch VAE that learns from both the image and sequence modalities of paths. Next, we optimize the SVG, represented with neural paths, to align with the text prompt, which is achieved through a two-stage path optimization process. In the first stage, we utilize a pre-trained diffusion model as a prior to optimize the combination of neural paths aligned with the text. In the second stage, we apply a layer-wise vectorization strategy to optimize path hierarchy.
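As a rough mental model of "an SVG represented with neural paths", the sketch below stores each path as a latent code plus placement and appearance parameters, and obtains control points by passing the latent code through a decoder. Everything here is an assumption made for illustration: the field names, the use of an affine transform and an RGB fill, and especially `toy_decoder`, which is a stand-in for a trained VAE decoder and does not reflect the actual network.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]
Decoder = Callable[[Sequence[float]], List[Point]]


@dataclass
class NeuralPath:
    """One path of the SVG (hypothetical layout, for illustration only)."""
    latent: List[float]  # optimized in place of raw control points
    # Affine placement in SVG matrix order (a, b, c, d, e, f):
    #   x' = a*x + c*y + e,   y' = b*x + d*y + f
    affine: Tuple[float, float, float, float, float, float] = (
        1.0, 0.0, 0.0, 1.0, 0.0, 0.0)
    fill: Tuple[int, int, int] = (0, 0, 0)


def toy_decoder(latent: Sequence[float]) -> List[Point]:
    """Stand-in for a trained decoder: maps a latent code to a square
    whose half-width is 1 + latent[0]. A real decoder would output the
    control points of a smooth closed path."""
    r = 1.0 + latent[0]
    return [(-r, -r), (r, -r), (r, r), (-r, r)]


def place(path: NeuralPath, decoder: Decoder) -> List[Point]:
    """Decode a path's latent code and apply its affine transform."""
    a, b, c, d, e, f = path.affine
    return [(a * x + c * y + e, b * x + d * y + f)
            for x, y in decoder(path.latent)]


# An "SVG" is then just a list of such paths; an optimizer would update
# latent, affine, and fill rather than individual control points.
svg = [
    NeuralPath(latent=[0.0], affine=(2.0, 0.0, 0.0, 2.0, 1.0, 1.0)),
    NeuralPath(latent=[0.5], fill=(200, 30, 30)),
]
points = [place(p, toy_decoder) for p in svg]
```

The point of the indirection is that the optimizer never touches control points directly: any latent code decodes to a shape drawn from what the decoder has learned, which is how geometric regularity can be retained during optimization.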

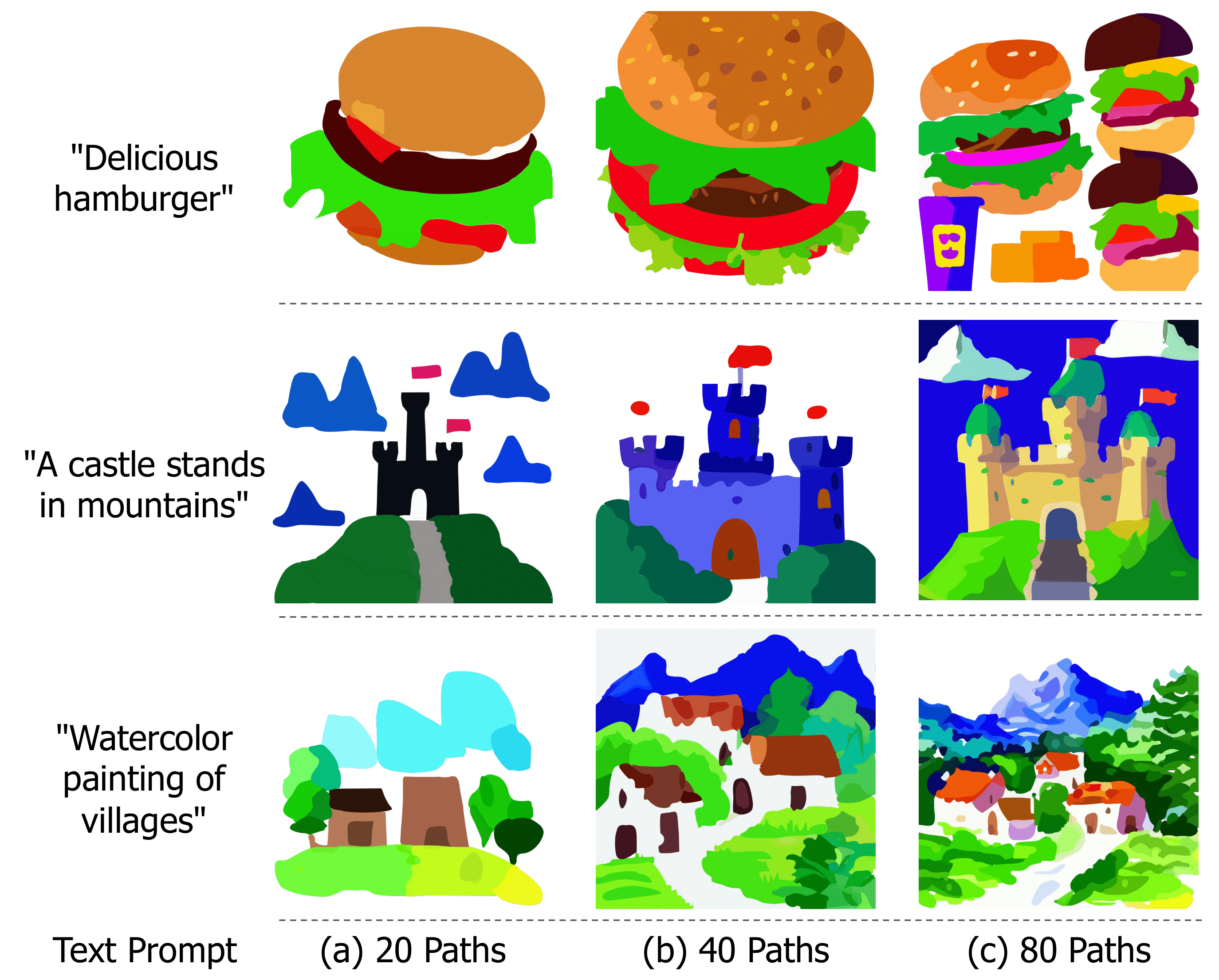

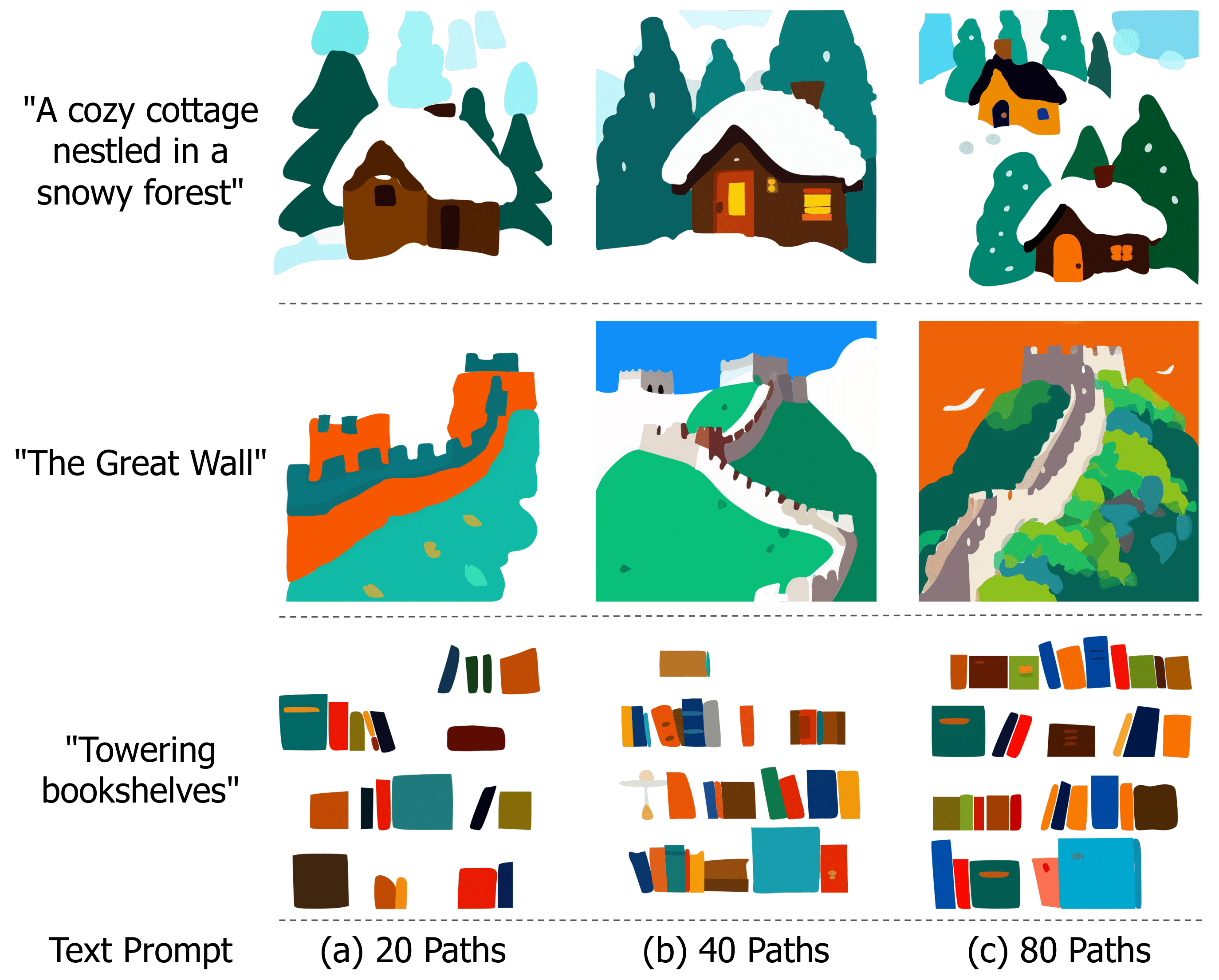

Our method can generate SVGs with varying levels of abstraction by adjusting the number of paths. Using fewer paths produces SVGs with a simpler and flatter style, while increasing the number of paths adds more detail and complexity.
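The path count is simply the number of `<path>` elements in the output file. The following minimal sketch serializes a list of closed cubic Bézier paths to SVG markup, so that truncating the list gives a coarser result. The tuple layout `(start, segments, fill)` and the helper names are assumptions made for this example, not the format used by the authors.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point, Point]      # (control 1, control 2, end point)
Path = Tuple[Point, List[Segment], str]   # (start point, segments, fill)


def path_d(start: Point, segments: Sequence[Segment]) -> str:
    """Build the SVG path data string for a closed cubic Bezier path."""
    parts = [f"M {start[0]:g} {start[1]:g}"]
    for c1, c2, end in segments:
        coords = " ".join(f"{v:g}" for p in (c1, c2, end) for v in p)
        parts.append("C " + coords)
    parts.append("Z")  # close the path back to the start point
    return " ".join(parts)


def to_svg(paths: Sequence[Path], size: int = 256) -> str:
    """Serialize paths in order; later paths are drawn on top."""
    body = "\n".join(
        f'  <path d="{path_d(start, segs)}" fill="{fill}"/>'
        for start, segs, fill in paths
    )
    return (f'<svg xmlns="http://www.w3.org/2000/svg" '
            f'viewBox="0 0 {size} {size}">\n{body}\n</svg>')


paths: List[Path] = [
    ((0, 0), [((0, 100), (100, 100), (100, 0))], "#204080"),
    ((50, 50), [((50, 150), (150, 150), (150, 50))], "#c03020"),
    ((90, 90), [((90, 120), (120, 120), (120, 90))], "#f0d040"),
]
coarse = to_svg(paths[:2])  # fewer paths, flatter look
detailed = to_svg(paths)    # all paths, more detail
```

In the method itself the number of paths is fixed before optimization rather than trimmed afterwards; the truncation here only illustrates how the count relates to the file's contents.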

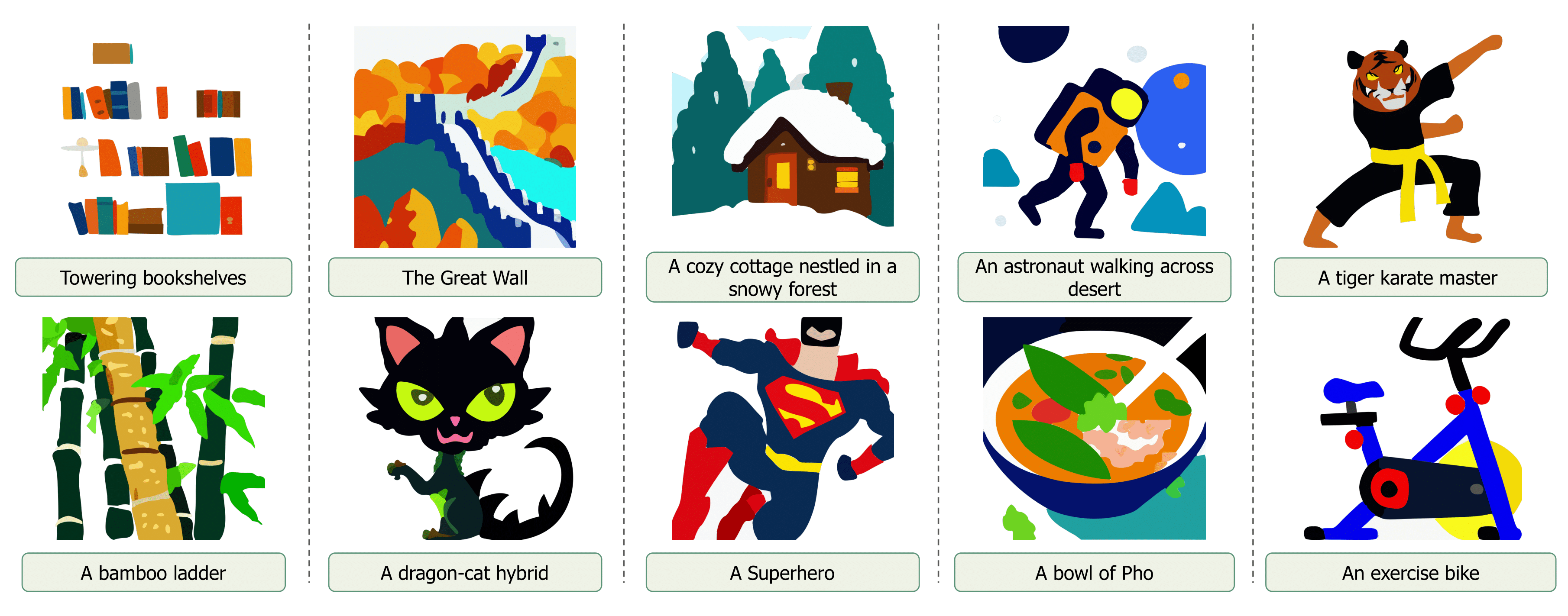

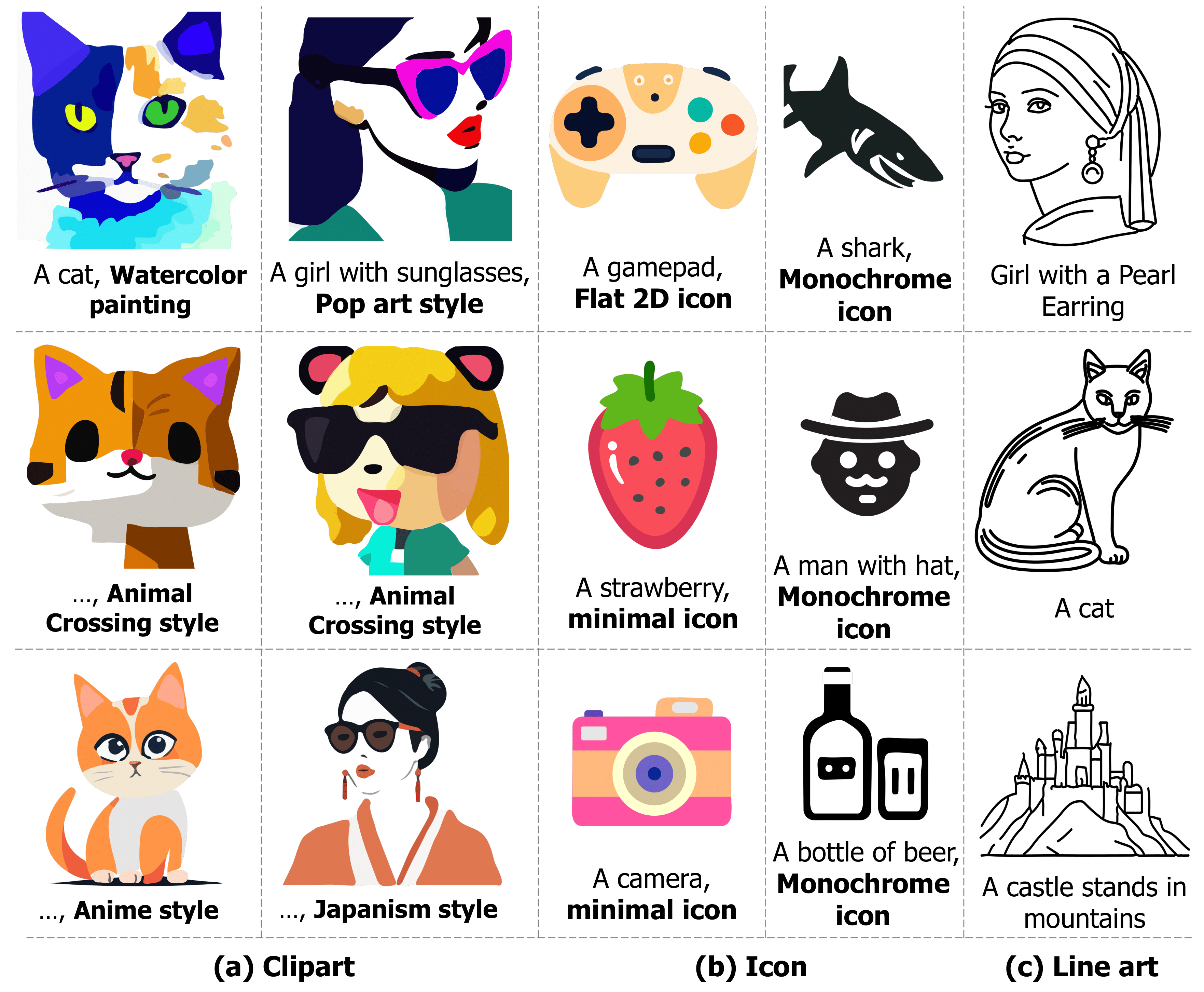

Our method can generate vector graphics with diverse styles by modifying style-related keywords in the text prompts, or by constraining path parameters such as fill colors and the number of paths.
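One simple way to constrain fill colors is to project each path's color onto a fixed palette. The sketch below does this with a nearest-neighbor lookup in RGB space. This is an assumed mechanism shown for illustration; the text above does not specify how the constraint is enforced, and `snap_to_palette` and `to_hex` are hypothetical helpers.

```python
from typing import Sequence, Tuple

RGB = Tuple[int, int, int]


def snap_to_palette(color: RGB, palette: Sequence[RGB]) -> RGB:
    """Return the palette entry closest to `color` (squared RGB distance)."""
    if not palette:
        raise ValueError("palette must contain at least one color")
    return min(palette,
               key=lambda p: sum((a - b) ** 2 for a, b in zip(color, p)))


def to_hex(color: RGB) -> str:
    """Format an RGB triple as an SVG fill value such as '#ff0000'."""
    return "#{:02x}{:02x}{:02x}".format(*color)


# A two-tone palette forces every path to be either black or white.
monochrome = [(0, 0, 0), (255, 255, 255)]
fills = [(30, 20, 40), (240, 250, 235), (128, 10, 10)]
snapped = [to_hex(snap_to_palette(c, monochrome)) for c in fills]
```

A projection like this could be applied after each optimization step or once at export time; which of these, if either, matches the authors' implementation is not stated here.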

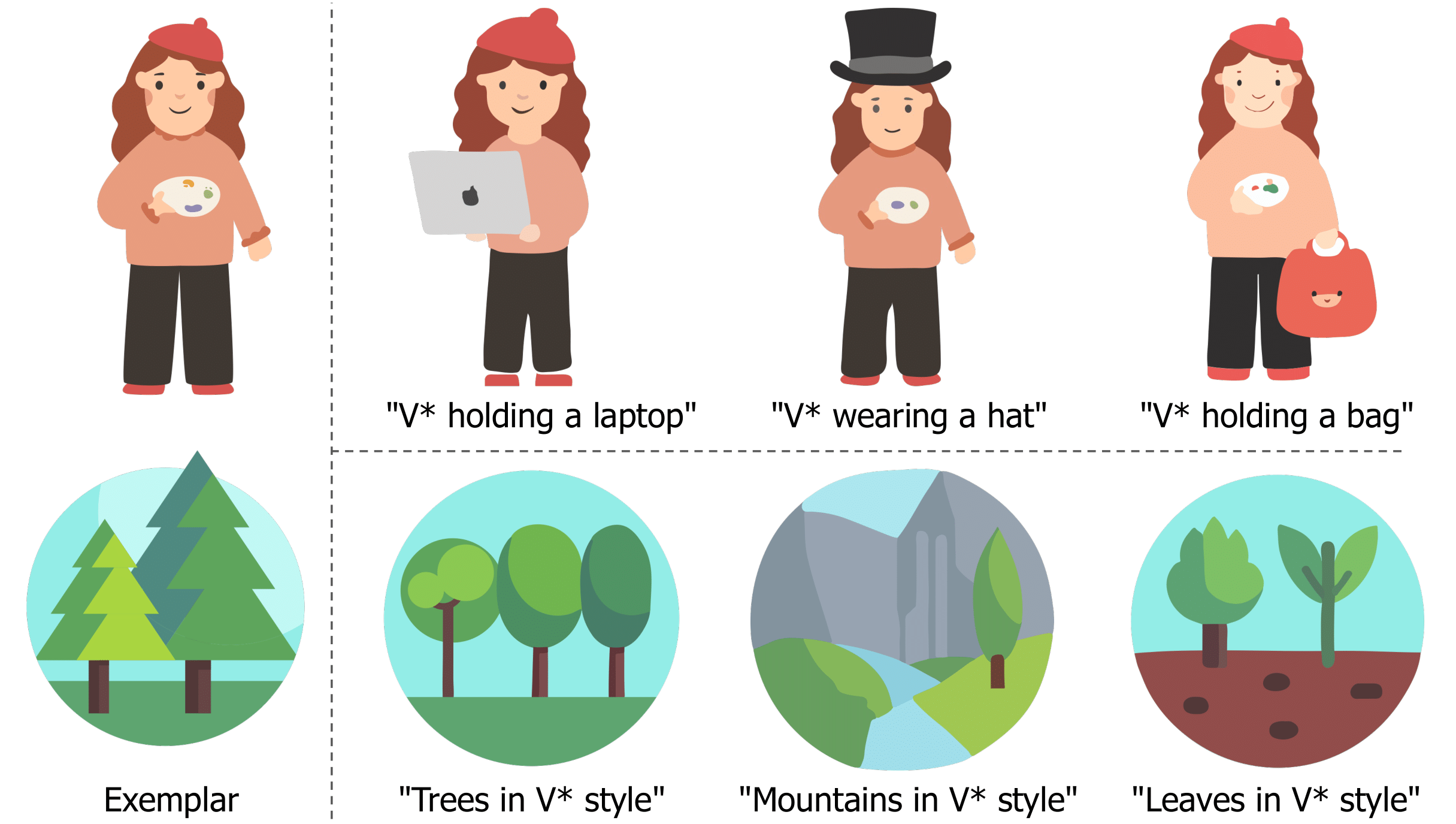

Given an exemplar SVG, our method can customize the SVG based on text prompts while preserving the visual identity of the exemplar.

Our method can generate vector icons from natural images.

Our framework can be extended to SVG animation by animating an initial SVG according to a text prompt describing the desired motion.